Manage Tableau Server

28.1.2023 | Tableau Server, Tableau Server Client, Tableau Hyper Api

Tableau Server Client (TSC) library increases your productivity as you interact with the Tableau Server REST API. With the TSC library you can do almost everything that you can do with the REST API, including: publish workbooks and data sources, create users and groups, query projects, sites, and more.

The Hyper API contains a set of functions you can use to automate your interactions with Tableau extract (.hyper) files. You can use the API to create new extract files, or to open existing files, and then insert, delete, update, or read data from those files.

The purpose of the excercise was to connect to Tableau Server, publish csv file converted to hyper file and dowload a workbook.

Project structure:

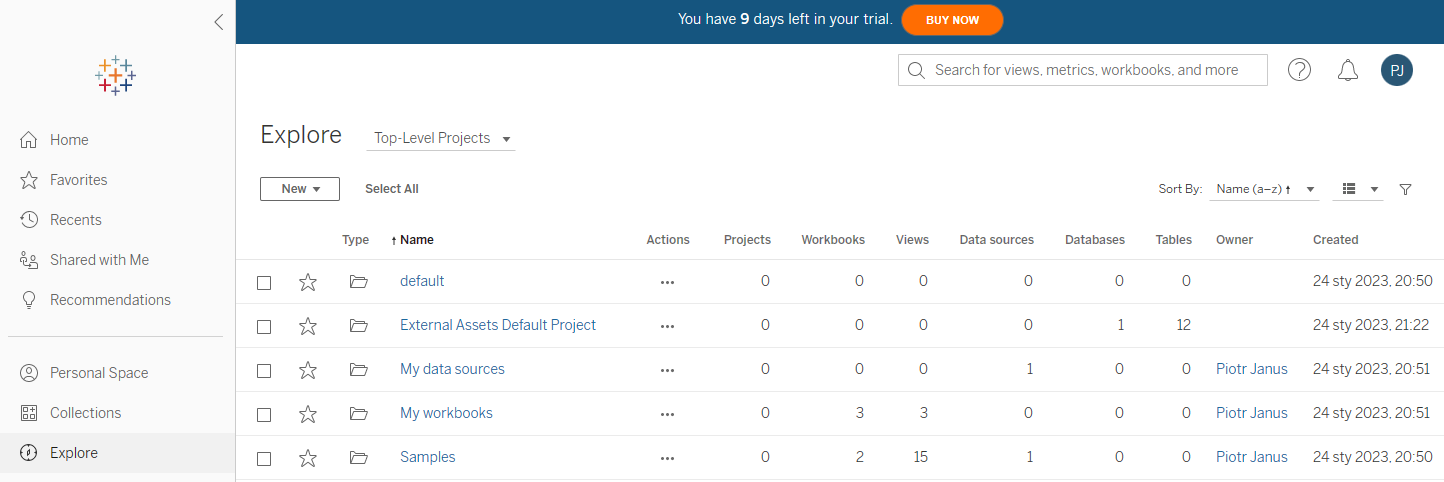

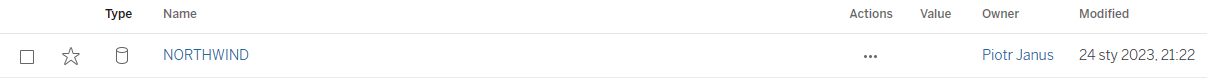

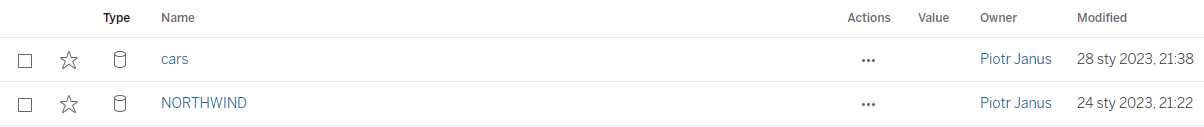

In the sub-project My data sources there is one data source: Northwind. I will add one more called cars.

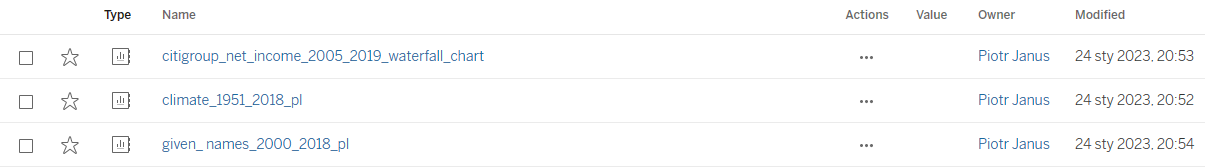

In the sub-project My workbooks there are three workbooks. I will download gven_names_2000_2018_pl

Connection to Tableau Server using a token:

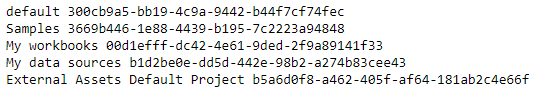

See projects with their ids:

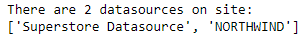

See data sources:

Convert csv to hyper file:

Publish hyper file:

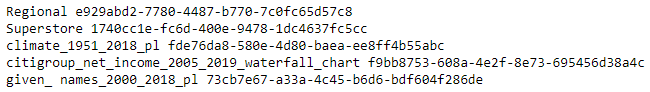

See workbooks with their ids:

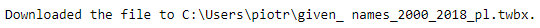

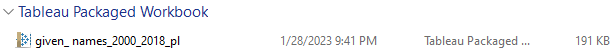

Download workbook:

Updating my website on GitHub using Git

1.1.2023 | Git, GitHub, Command Prompt

I host my website on GitHub. When I add a new post I use Command Prompt and Git commands to do the updates. The following code consists of the following actions:

- create local repo of the GitHub repo

- create a new branch and switch to it

- copy new files to relevant folders

- add to staging area, commit changes

- merge new branch with master branch

- push changes to GitHub

- delete local repo

Superstore flow in SAS

25.10.2022 | Tableau Prep Builder, SAS Studio in SAS OnDemand for Academics

Tableau Prep Builder comes with a tutorial that walks you through creating a flow for Sample Superstore. It introduces you to the common operations that are available in program.

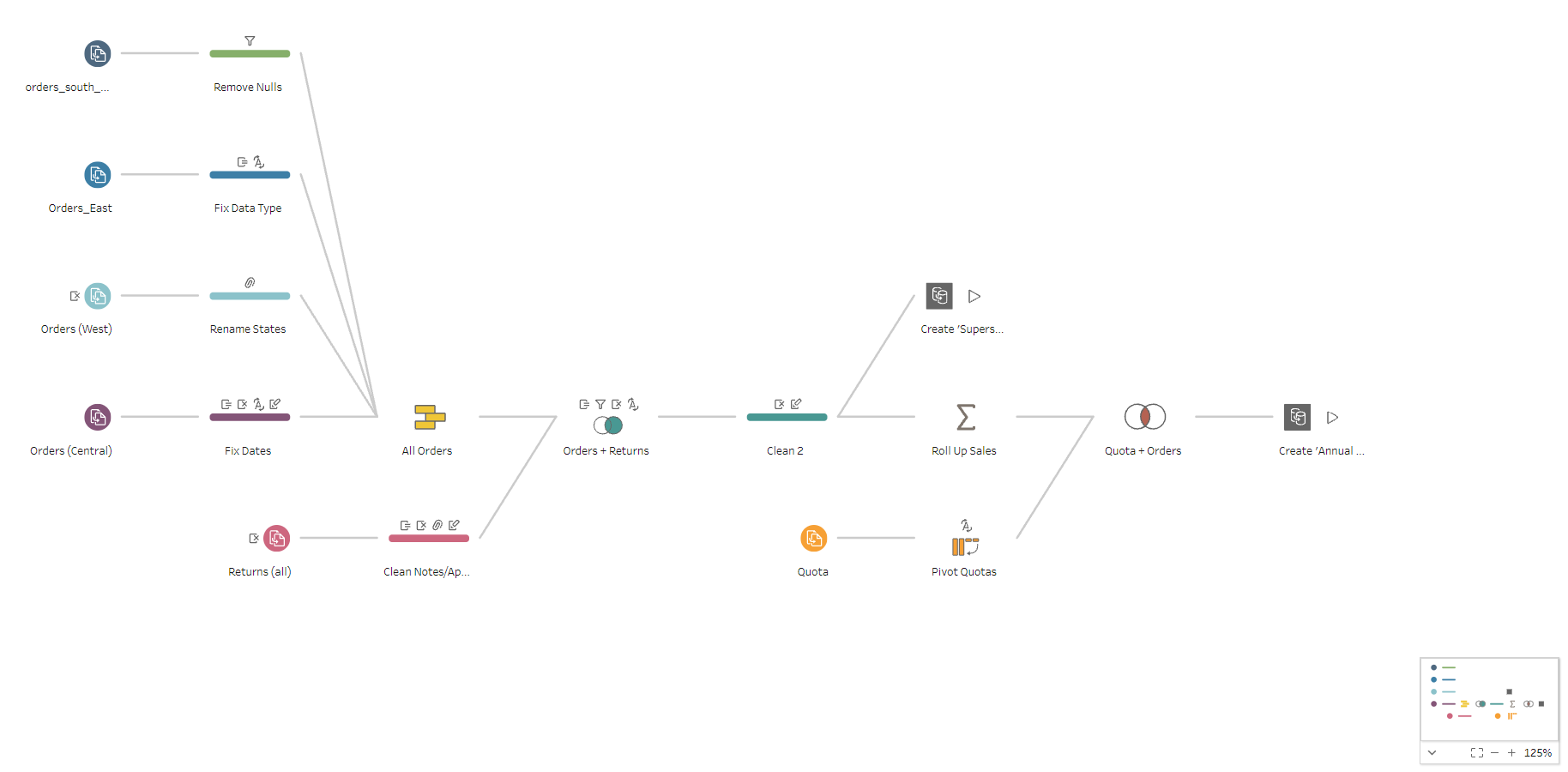

Tableau Prep's Superstore flow:

The purpose of the excercise was to recreate the Prep's tutorial flow using SAS Studio doing it both Non-SQL Base SAS and PROC SQL way.

All the source files were imported to a library called SUPSFLOW

SAS Studio DATA and PROC steps:

SAS Studio PROC SQL queries:

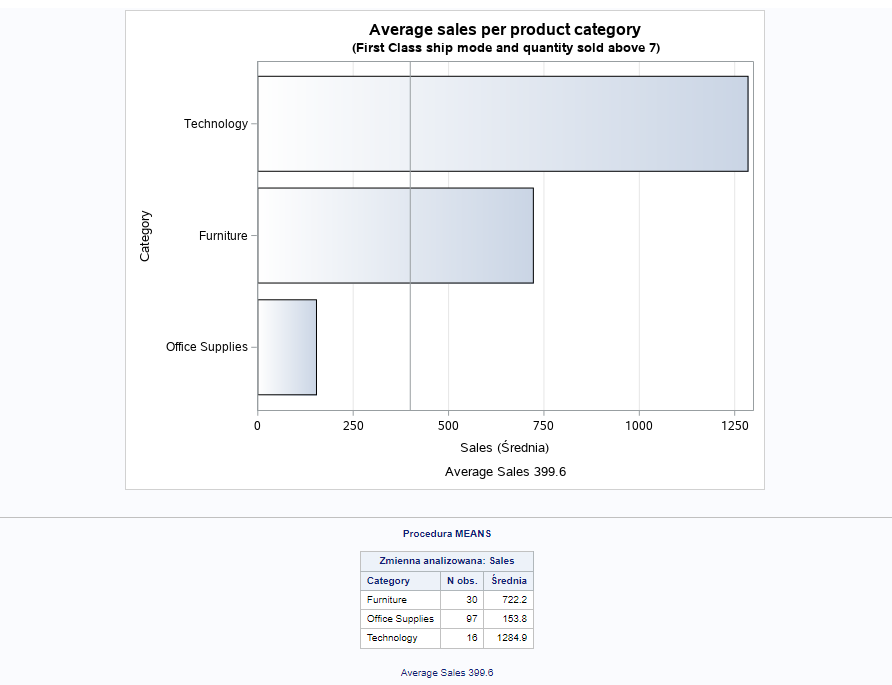

Results in form of chart and summary table:

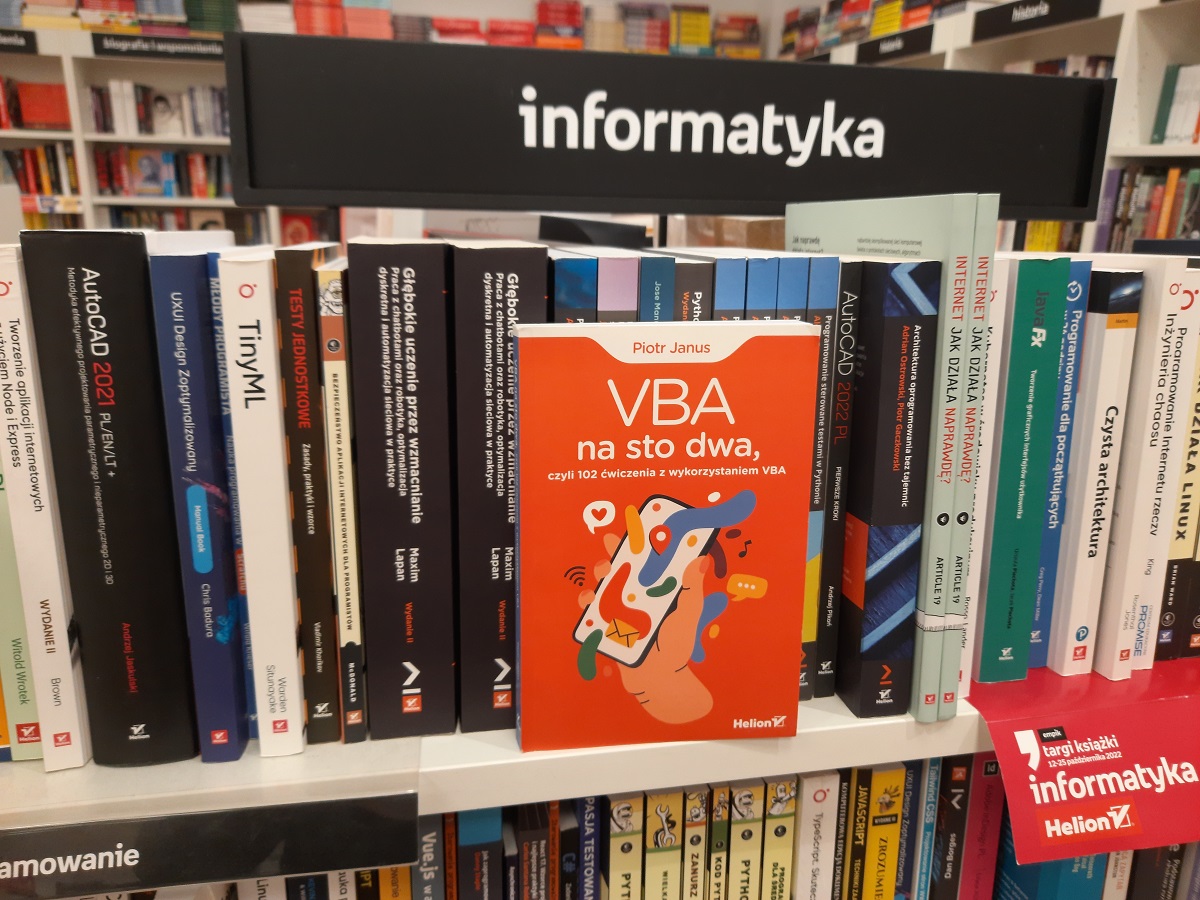

My book: VBA na sto dwa, czyli 102 ćwiczenia z wykorzystaniem VBA

21.10.2022

The idea for the book came to my mind when I was studying the VBA language. At some point I felt that I covered a lot of theory, but I lacked practice. I started to invent more and more tasks myself. The more material I had, the more I considered making it useful to other VBA adepts. I wrote to Helion, a popular IT publishing house in Poland, and they were happy to publish it as a book. Working with Helion on the final product was a great adventure and a real pleasure.

VBA na sto dwa, czyli 102 ćwiczenia z wykorzystaniem VBA appeared in bookshops Jan 2021. So far over 500 copies have been sold. It is something I am really proud of.

Recently, I found the book on a shelf in Empik - a popular polish bookshop:

Index of Economic Freedom using Dash

27.09.2020 | dash, plotly, dash bootstrap components, pandas, heroku, iframe

The purpose of the excersise is to create a multi-tab dashboard showing the results of 2020 Index of Economic Freedom. The index measures economic freedom of 186 countries based on trade freedom, business freedom, investment freedom, and property rights.

The app code:

The css code:

The dataset for the excercise available on Github.

To find out how to publish your app on Heroku I recommned the following tutorial by Adam on the Charming Data channel.

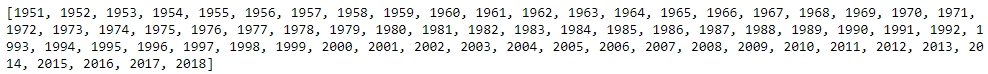

Climate change in Poland

31.01.2020 | urllib.request, BeautifulSoup, pandas, zipfile, io, matplotlib, numpy

Winter 2020 in Warsaw has never been warmer. No snow and temperatures above 10°C are common. Looks like the global warming has become a fact.

Climate changes inspired me to track the average yearly temperatures in different places in Poland. The timespan is the early 50s of the 20th century till 2018. The yearly temperatures are calculated as an average of daily average temperatures.

The source of the data is the Polish Institute of Meteorology and Water Management - National Research Institute which is an official body collecting climate data in Poland.

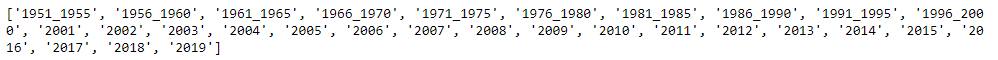

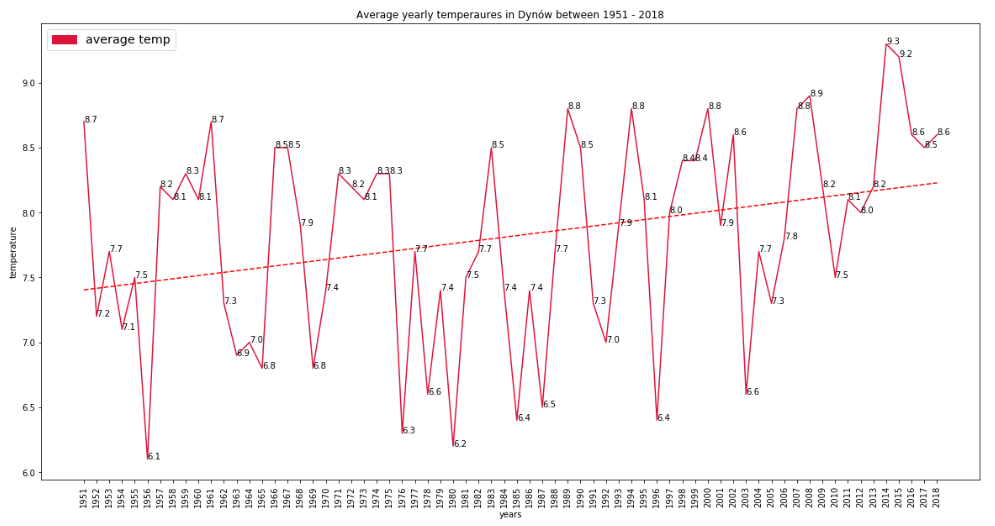

At first, let's parse a list of years:

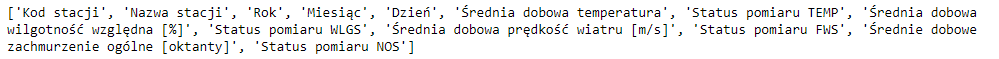

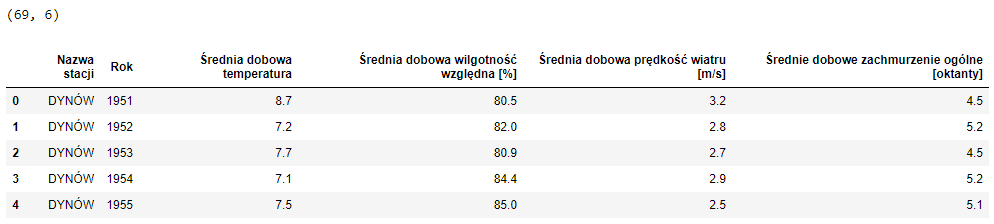

Get the column names:

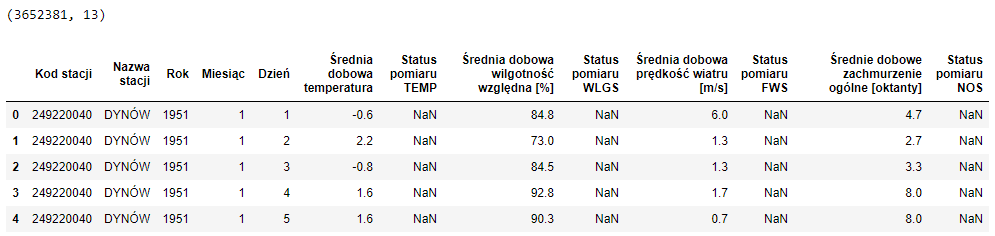

Get the climate data:

Save the data in the csv file compressed in the gzip.

Load the data from the csv file compressed in the gzip.

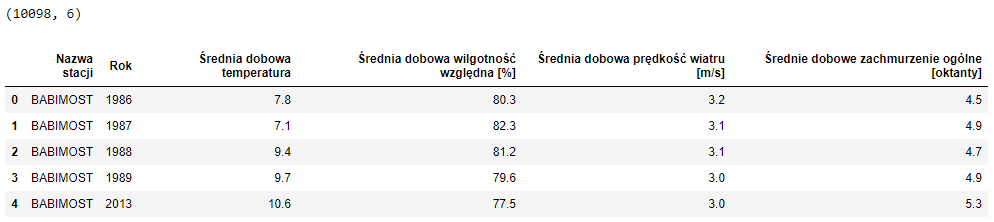

Sort the data by year, station, month and day. Next, group it by station and year.

Save the file in the xlsx format that will be used for visualizations.

Tableau viz (mobile version):

Visualization in Python using matplotlib:

Load the xlsx file and limit the data to Dynów. In this city, located in Subcarpathian Voivodeship, the temperature has been measured since 1951.

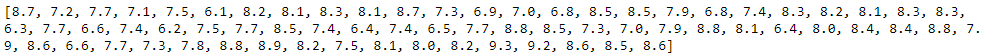

Create the list of yearly averge temperatures for Dynów. 2019 hasn't been included because at the time the code was exectuded the data for December 2019 wasn't available:

The same for years:

Plot the map:

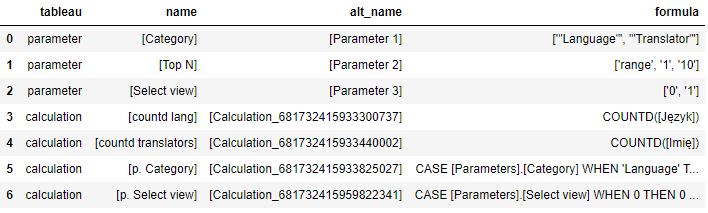

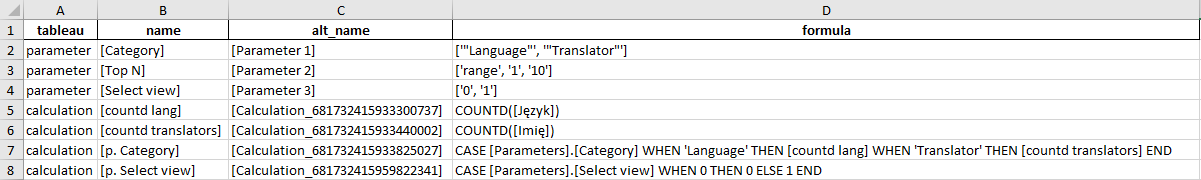

Tableau calculations and parameters export

5.12.2019 | Tableau, xml.etree.ElementTree, re, pandas

Sometimes it is required to export a list of parameters and calculations along with their respective values and formulas. As a developer, I was asked for that as a part of a data governance process. Unfortunately, such action is not supported in Tableau. That is why I created the following piece of code.

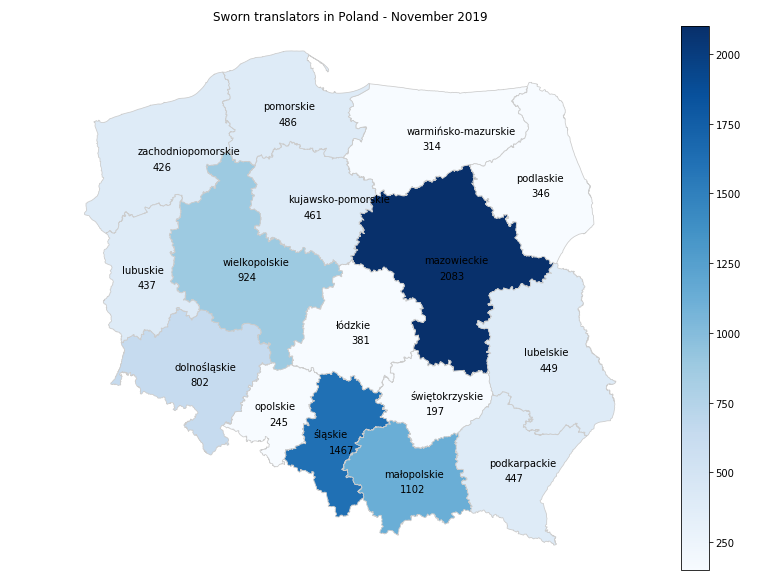

As an example I've used the Sworn Translators Tableau dashboard which was the result of one of my earlier projects:

Dataframe:

Excel file:

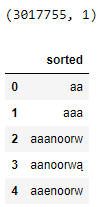

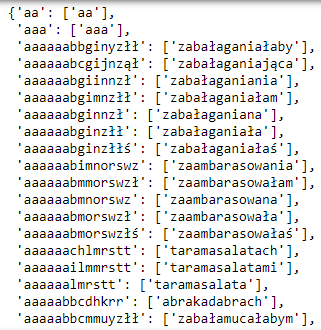

Scrabble assistant

16.11.2019 | pandas, zipfile, urllib.request, pickle, datetime, gzip, itertools, string

Scrabble is my favourite word game. I have always wondered how many bingos, bonus 50 points for using all the 7 letters in the rack, I was able to achieve during one game. My personal best was 2 bingos until I realized I could get a little help. Thanks to Python I managed to get 5 bingos. However, I wasn't familiar with any of the bingo words!

The aim of the project is to help gain advantage in Scrabble. The code allows you to check the Polish words you can create out of the letters in your hand.

The source file is the official wordlist available for word games and published by Słownik Języka Polskiego PWN (Polish Language Dictionary). The name of the source file changes periodically so visit the site and make sure you type in the correct one.

The process of executing the code may last a while since the list contains 3 million words.

The first step of the project is to import the list of available words and sort the letters of every word alphabetically. Next, save the file in a compressed gzip format.

The next step is to create a dictionary where keys are sorted words and value or values are words that can be made up of the letters in a key.

Load the dictionary.

Use the following code to type letters and generate desired Scrabble words.

Examples:

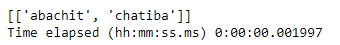

'aabchit' - 7-letter words:

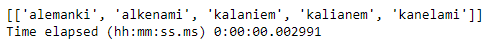

'aaeiklmn' - 8-letter words:

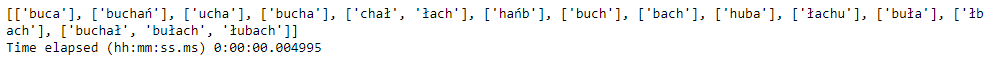

'uńchałb' - 4 to 7-letter words:

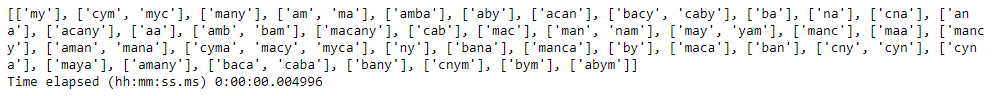

'aabcmny' - 2 to 7-letter words:

Sworn translators web scraping

3.11.2019 | requests, BeautifulSoup, pandas, Tableau, matplotlib, geopandas

The purpose of the excersise is to scrap the data from the website of the Polish Ministry of Justice and to present the results on a map chart using both matplotlib and Tableau.

The object of the web scraping are sworn translators. In Poland, it is a group of professionals that are authorized by the government to translate official documents.

The list is being updated periodically, therefore the results of the exercise may differ.

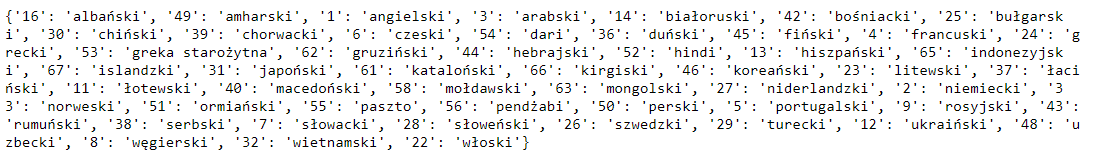

Montenegrin has no results. It was excluded from the web scraping.

The dictionary of the langueages and sample results:

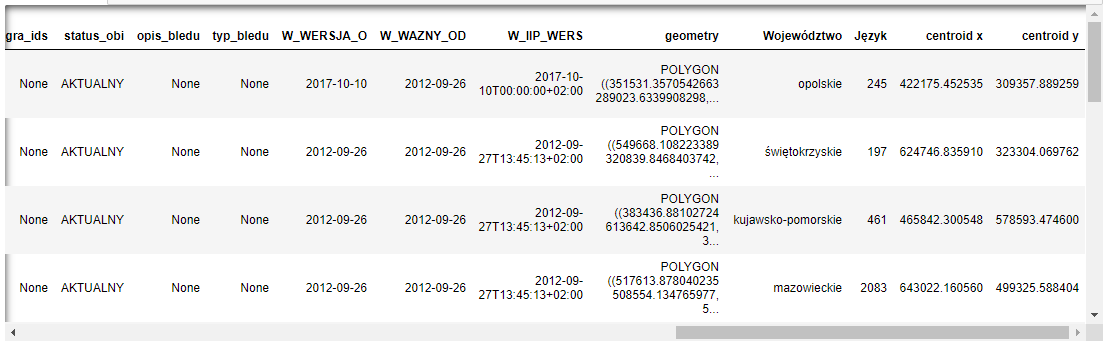

Dataframe shaping:

Dataframe:

Tableau viz (mobile version):

Visualization in Python using geopandas and matplotlib:

Step 1. Import the Polish voivodeship shapefile, the sworn translators data and get the centroids of the polygons.

Step 2. Plot the map:

PostgreSQL database

24.10.2019 | psycopg2, pandas, SQL queries

The purpose of the excersise is to connect and query a postgreSQL database.

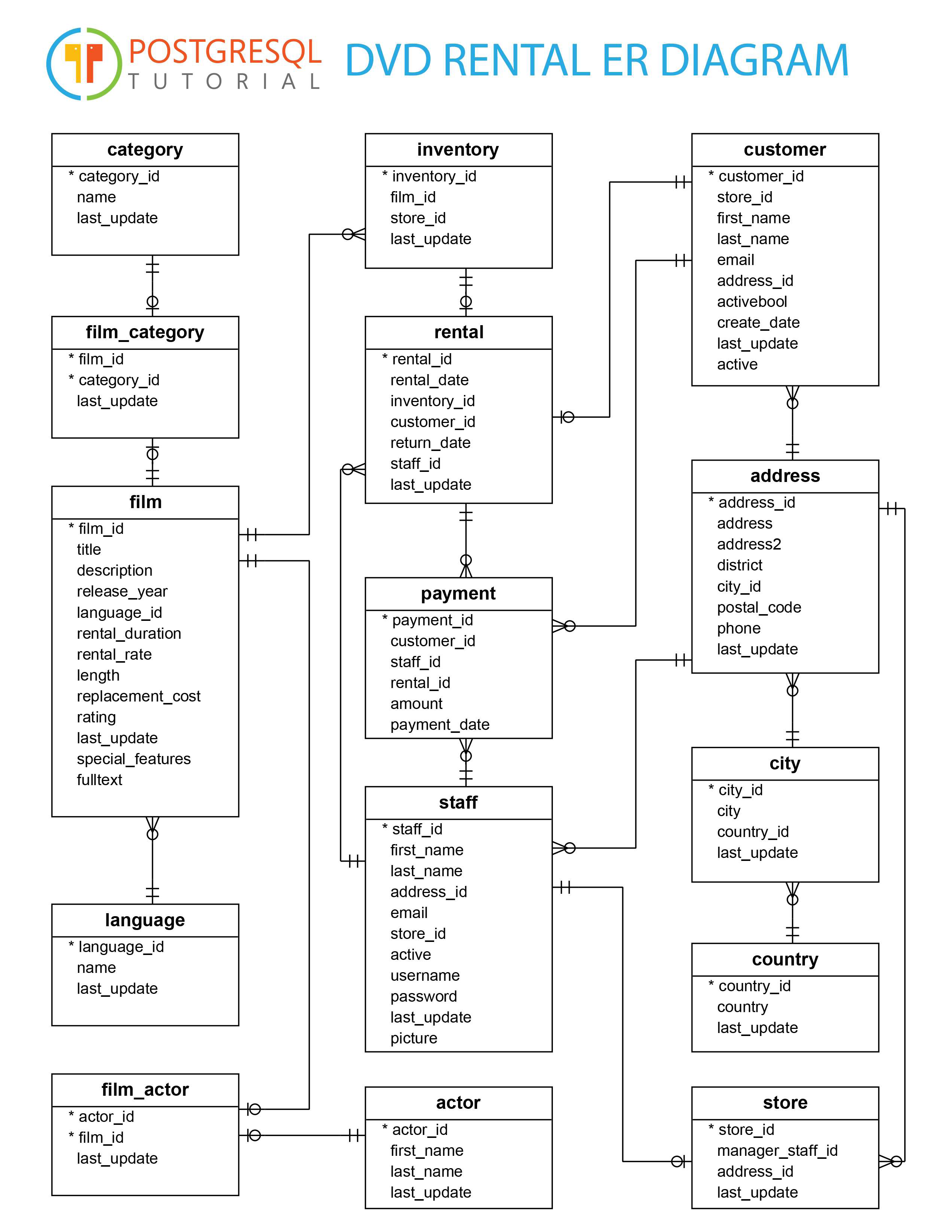

First, install PostgreSQL on your local system. Next, download and connect to the DVD rental database, which is a common sample database for PostgreSQL used for practice. Follow the instructions on the POSTGRESQL TUTORIAL website to complete the above steps. Finally, install psycopg2 package, e.g. in your Anaconda Prompt terminal.

DVD Rental ER Model

Once all set up, use the following code to connect to the database:

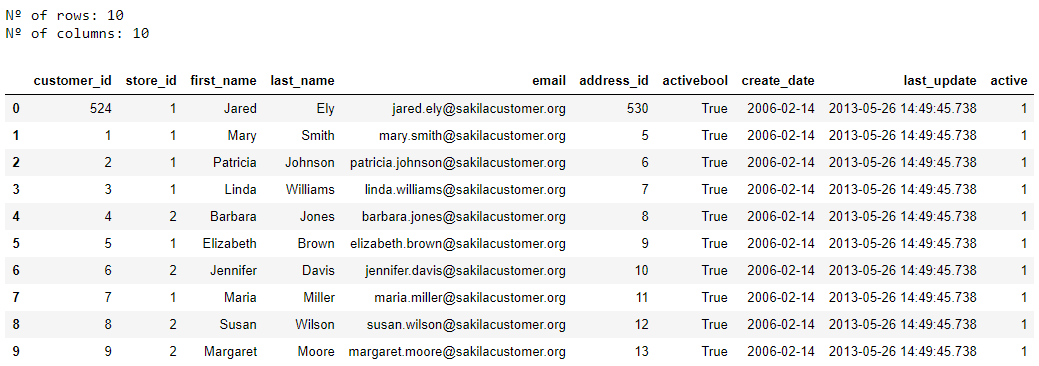

The code returns the result of the query in a dataframe structure. Apart from that it returns the number of columns and rows of the table. The HTML display part extends the view in the notebook to 100%. Uncomment the last line to save your query's results in the Excel file.

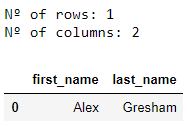

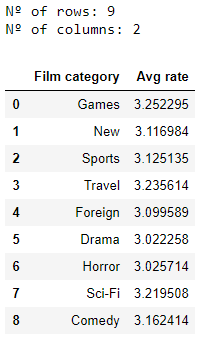

The result of the query:

Useful queries:

List all the tables and views from the dvdrental.

Find all the columns that contain a specific word.

Get the types of the data in the table.

Excercises to practice:

Excercise 1

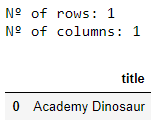

Find the title of the film starring Penelope Guiness, Warren Nolte and Rock Dukakis.

Possible solution:

Excercise 2

Find the first name and the last name of the most profitable customer in April 2007.

Possible solution:

Excercise 3

Find the film categories where the average rental rate is above the average rental rate for all the films.

Possible solution:

Geocoordinates with geopy

13.09.2019 | geopy, pandas

The purpose of the excersise is to identify geographical coordinates for the list of Polish cities.

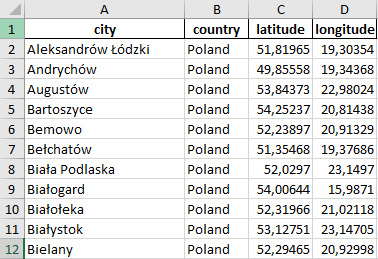

Get the list of the cities:

Use geopy to locate the coordinates of the cities. Bear in mind that geopy allows a limited amount of results to return from the service. Too many queries will result in time out error. Luckily, the limit is high enough to get the list of the cities of our interest for the exercise.

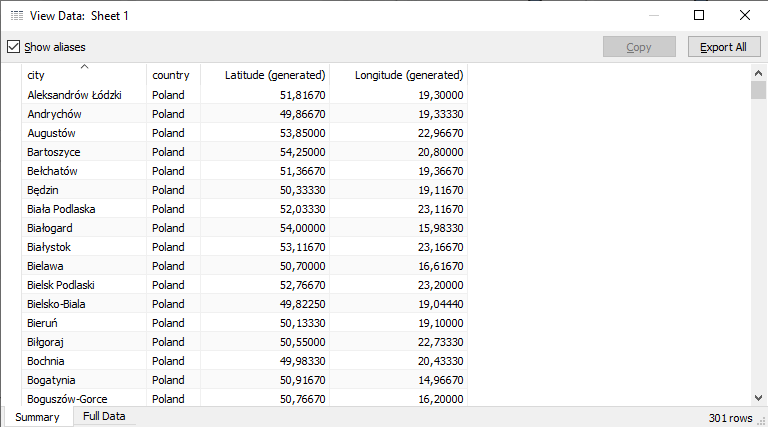

First 12 rows:

The results that we have are not perfect. The final stage of the task is to remove the results that don't interest us, e.g. non-Polish cities or districts of Warsaw.

Removed rows:

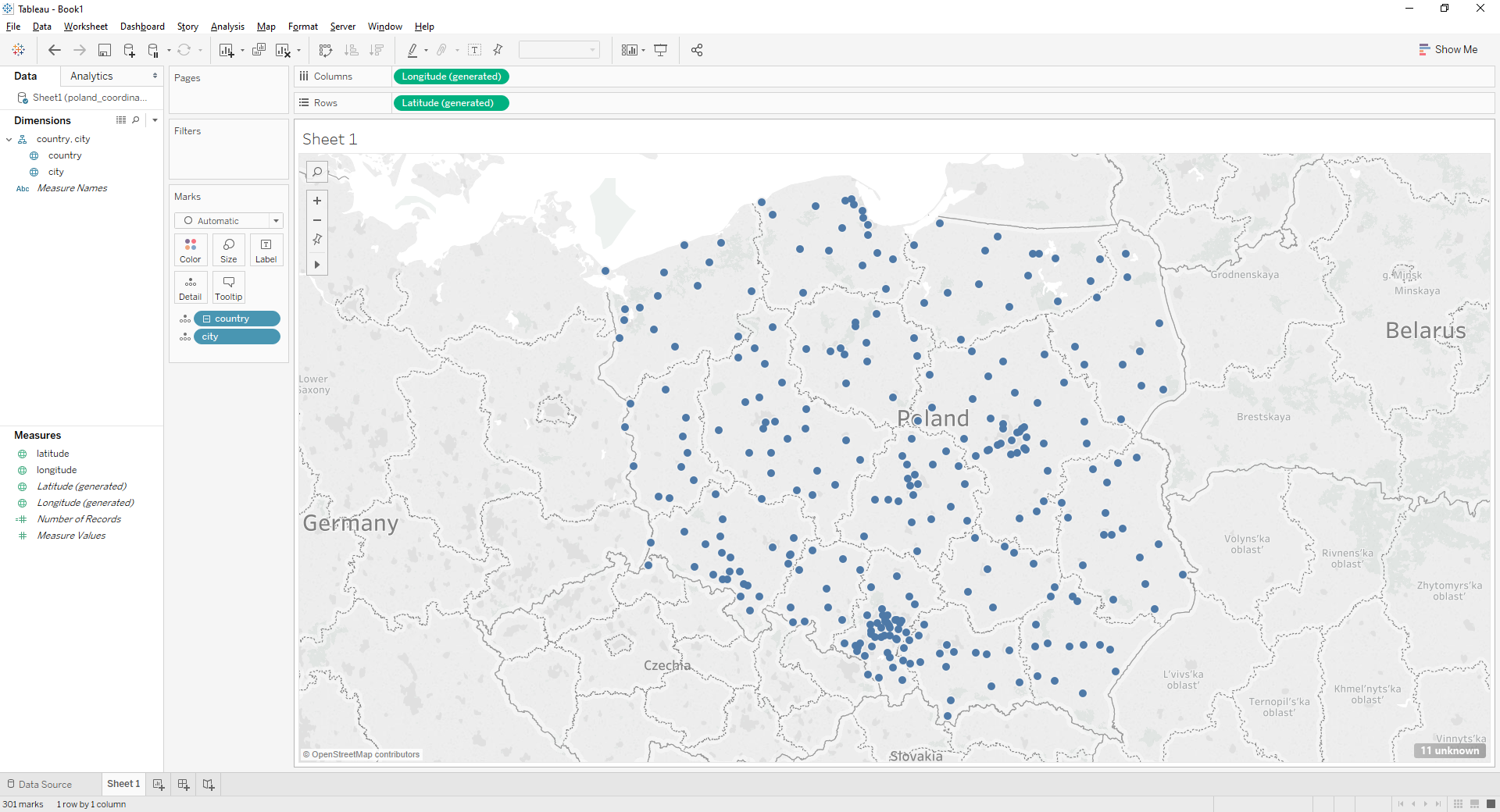

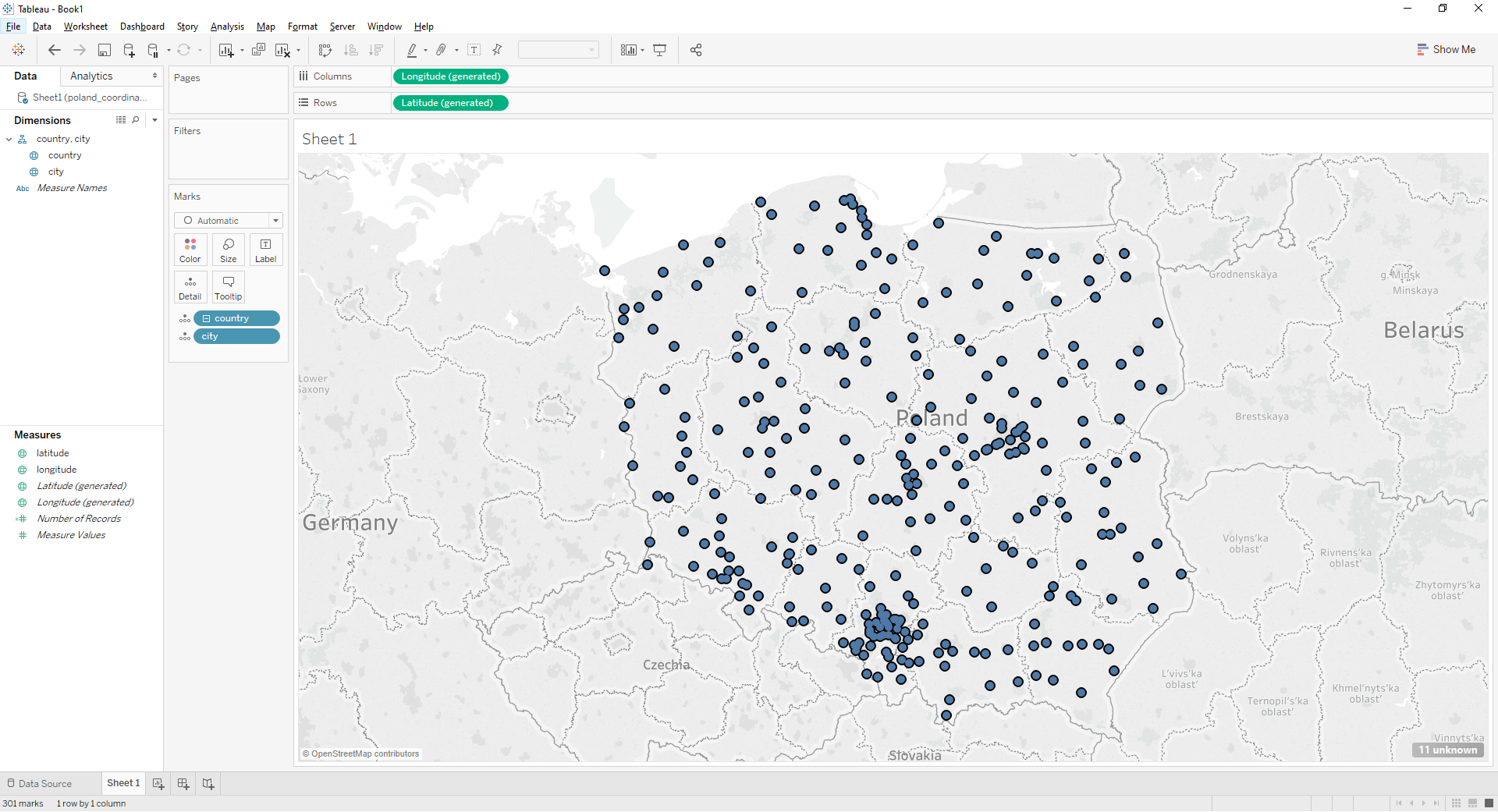

Tableau can also be helpful to identify the geocoordinates of locations. Let's load our poland_coordinates.xlsx file to the application. Next, let's put the cities on the map.

Press Ctr + A to select all the cities on the map.

Select View Data and Export All. Voila, the latitude and the longitude generated by the Tableau libraries.

Business intelligence tools in job offers

17.08.2019 | requests, BeautifulSoup, pandas, time, regular expression operations (re), Tableau

Before you invest your time in learning a new skill you would often like to know if it is demanded on the market. For example, which business intelligence tool is the most commonly used? To find the answer you need to analyse the job market.

Pracuj.pl is the biggest and the most popular job offer portal in Poland. The old job advertisements are stored in the archives and are publically available.

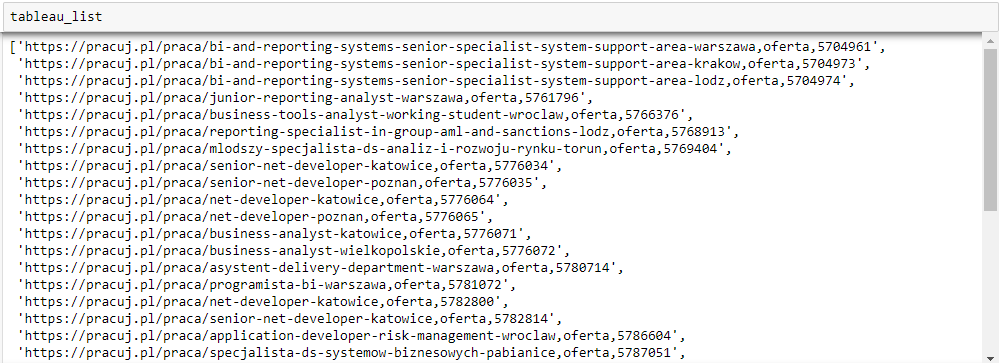

The purpose of the excercise is to webscrap the archive of Pracuj.pl and compare the popularity of the selected business intelligence tools. The key words that were used were: 'Tableau', 'Power Bi" and 'Qlik'. The data collection period was January - April 2019.

There are around 1000 pages each month including 50 job offers per page. That is why the webscarping process is time-consuming.